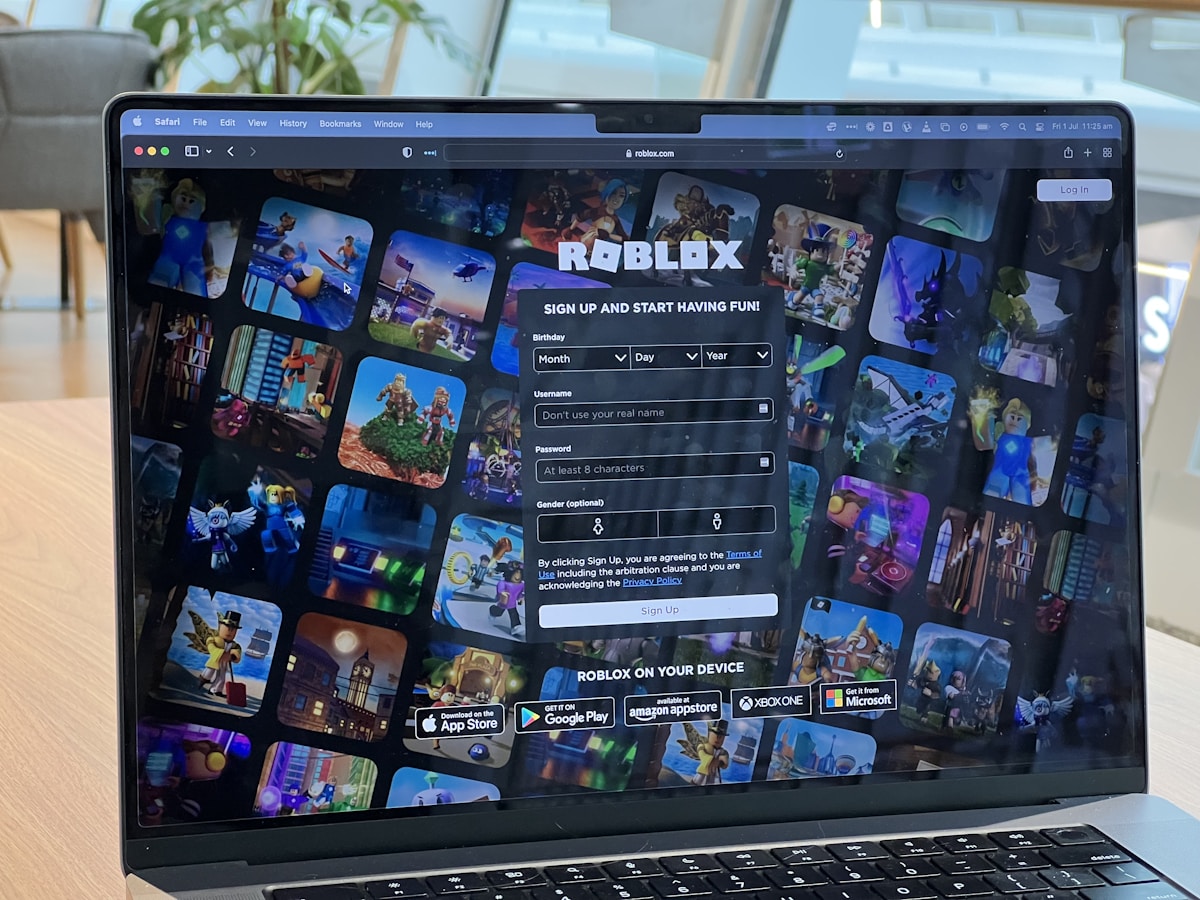

Roblox Introduces Open‑Source AI Moderation to Safeguard Young Players

Roblox, the global platform that lets millions of children create, share, and play immersive games, announced a major upgrade to its safety infrastructure this week. The company is deploying an open‑source artificial‑intelligence system designed to detect and block predatory language in real‑time chat, marking a decisive step toward protecting its youngest users from online abuse.

The new tool, dubbed Roblox SafeChat AI, builds on the firm’s existing moderation pipeline but adds a deep‑learning layer that can understand context, slang, and evolving threat patterns. Unlike traditional keyword filters, the AI can differentiate between harmless gaming jargon and covert attempts to solicit personal information or arrange off‑platform contact.

“Our community is built on imagination and collaboration, but we also have a responsibility to keep kids safe,” said David Baszucki, Roblox’s co‑founder and CEO, during the product launch. “By making the technology open source, we invite developers, researchers, and safety advocates to scrutinize, improve, and adapt the system for a rapidly changing digital landscape.”

Why Open Source Matters

Open‑sourcing the AI model serves three strategic purposes:

- Transparency: Stakeholders can examine the model’s decision‑making process, reducing concerns about hidden biases or over‑blocking.

- Community‑Driven Improvement: External contributors can submit enhancements, helping the system stay ahead of new slang, coded language, and regional dialects.

- Academic Collaboration: Researchers gain a real‑world dataset (anonymized and aggregated) to study online safety, fostering broader advancements in child‑protection technology.

How the System Works

SafeChat AI operates on a three‑stage pipeline:

- Pre‑filtering: A lightweight keyword and pattern scanner removes obvious profanity and known illicit phrases before they reach the AI model.

- Contextual Analysis: A transformer‑based neural network evaluates the surrounding conversation, identifying subtle cues such as grooming tactics, threats, or attempts to exchange personal details.

- Action Layer: When the model flags a message, the system can trigger one of three responses: automatic deletion, a warning to the sender, or escalation to a human moderator for further review.
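
The three stages above can be sketched in a few lines of Python. This is only an illustration of the flow described in the article, not Roblox's code: the function names, the placeholder blocklist pattern, the cue phrases, and the score cut-offs are all assumptions, and the simple phrase counter merely stands in for the transformer model.

```python
import re
from enum import Enum
from typing import List


class Action(Enum):
    ALLOW = "allow"
    DELETE = "delete"
    WARN = "warn"
    ESCALATE = "escalate"


# Hypothetical blocklist with a placeholder pattern; a real list would be maintained separately.
BLOCKED_PATTERNS = [re.compile(r"\bexample_banned_phrase\b", re.IGNORECASE)]

# Hypothetical cue phrases. They stand in for a trained contextual model.
RISK_CUES = ("how old are you", "where do you live", "add me on")


def pre_filter(message: str) -> bool:
    """Stage 1: return True if the message matches a known blocked pattern."""
    return any(p.search(message) for p in BLOCKED_PATTERNS)


def score_context(history: List[str], message: str) -> float:
    """Stage 2 stand-in: score the last few messages plus the new one, from 0.0 to 1.0."""
    window = " ".join(history[-5:] + [message]).lower()
    hits = sum(cue in window for cue in RISK_CUES)
    return hits / len(RISK_CUES)


def choose_action(score: float) -> Action:
    """Stage 3: map a risk score to a response (cut-offs are assumed values)."""
    if score >= 0.9:
        return Action.DELETE
    if score >= 0.6:
        return Action.ESCALATE
    if score >= 0.3:
        return Action.WARN
    return Action.ALLOW


def moderate(history: List[str], message: str) -> Action:
    """Run one message through all three stages in order."""
    if pre_filter(message):
        return Action.DELETE
    return choose_action(score_context(history, message))
```

Calling `moderate(["how old are you"], "add me on another app")` scores the conversation as a whole rather than the last message alone, which is the point of the contextual stage.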

The model runs on Roblox’s edge servers, ensuring sub‑second latency even during peak traffic. To protect privacy, all processing occurs on‑device or within the company’s secure enclave, and no raw chat logs are stored long‑term.

Balancing Safety and Freedom of Expression

Critics of aggressive moderation argue that over‑zealous filters can stifle creativity and legitimate communication. Roblox addresses this tension with a confidence threshold that developers can tune for each game. Experiences that call for tighter control, such as educational ones, can adopt stricter settings, while social hubs that encourage free conversation may opt for a more permissive stance.
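
A per‑experience threshold of this kind might look like the following sketch. The `ModerationConfig` class, the experience IDs, and the numeric thresholds are hypothetical and chosen only to show the idea: a lower threshold flags more messages (stricter), a higher one flags fewer (more permissive).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModerationConfig:
    experience_id: str
    flag_threshold: float  # messages scoring at or above this value are flagged

    def __post_init__(self) -> None:
        # Reject thresholds outside the model's assumed 0.0 to 1.0 score range.
        if not 0.0 <= self.flag_threshold <= 1.0:
            raise ValueError("flag_threshold must be between 0.0 and 1.0")


# Assumed platform-wide default for experiences without their own setting.
DEFAULT_THRESHOLD = 0.5

# Hypothetical experiences: a strict classroom and a more permissive social hub.
CONFIGS = {
    "classroom-demo": ModerationConfig("classroom-demo", 0.3),
    "social-hub-demo": ModerationConfig("social-hub-demo", 0.7),
}


def is_flagged(experience_id: str, score: float) -> bool:
    """Return True if a model score crosses the experience's threshold."""
    config = CONFIGS.get(experience_id)
    threshold = config.flag_threshold if config else DEFAULT_THRESHOLD
    return score >= threshold
```

Under these assumed values, a message scored at 0.4 would be flagged in the classroom experience but allowed in the social hub.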

In addition, the platform offers an appeal mechanism. Users who believe their messages were blocked by mistake can request a review, and the outcome feeds back into the model’s training data, creating a self‑correcting loop.
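
One way to picture that feedback loop is as a step that converts reviewed appeals into labeled examples for the next training run. The sketch below assumes a simple `Appeal` record and a binary harmful/harmless label; neither is described in the announcement, and how the real system weighs appeal outcomes is not stated.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class Appeal:
    message: str
    model_flagged: bool          # what the model originally decided
    reviewer_says_harmful: bool  # what the human reviewer concluded


def to_training_examples(appeals: List[Appeal]) -> List[Tuple[str, int]]:
    """Use the reviewer's verdict as the label (1 = harmful, 0 = harmless)."""
    return [(a.message, int(a.reviewer_says_harmful)) for a in appeals]


def overturn_rate(appeals: List[Appeal]) -> float:
    """Share of appealed decisions where the reviewer reversed a model flag."""
    if not appeals:
        return 0.0
    overturned = sum(
        a.model_flagged and not a.reviewer_says_harmful for a in appeals
    )
    return overturned / len(appeals)
```

The overturn rate gives a rough signal of how often the model over‑blocks among appealed messages, though it says nothing about blocks that were never appealed.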

Future Roadmap

Roblox plans to extend SafeChat AI beyond text. Upcoming releases will add voice‑chat analysis, along with image recognition to detect inappropriate visual content. The company also intends to publish regular transparency reports detailing detection rates, false‑positive rates, and the impact of community contributions.

By opening its AI moderation tools to the world, Roblox hopes to set a new industry standard for child safety in online gaming. As the digital playground continues to grow, the blend of advanced technology, open collaboration, and clear accountability may prove essential in keeping the experience enjoyable—and safe—for the next generation of creators.