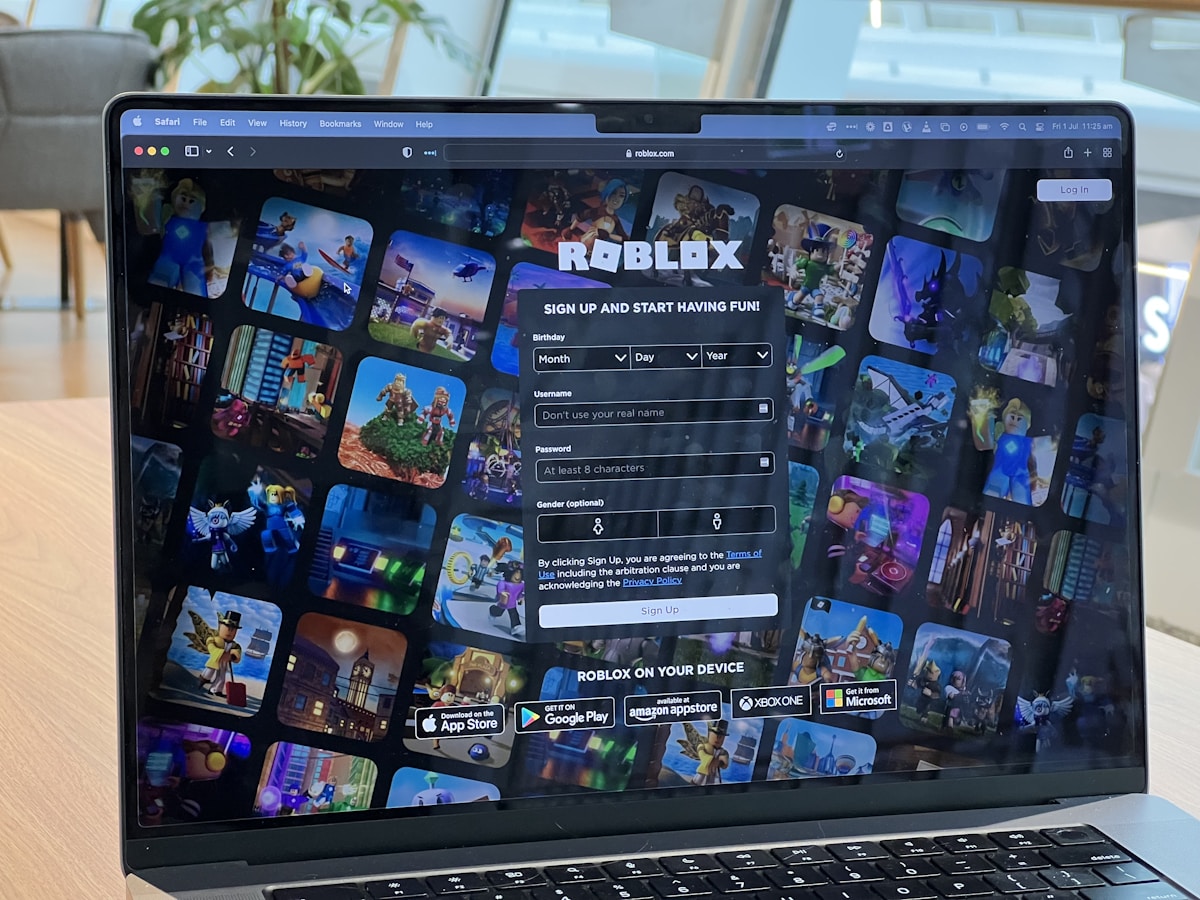

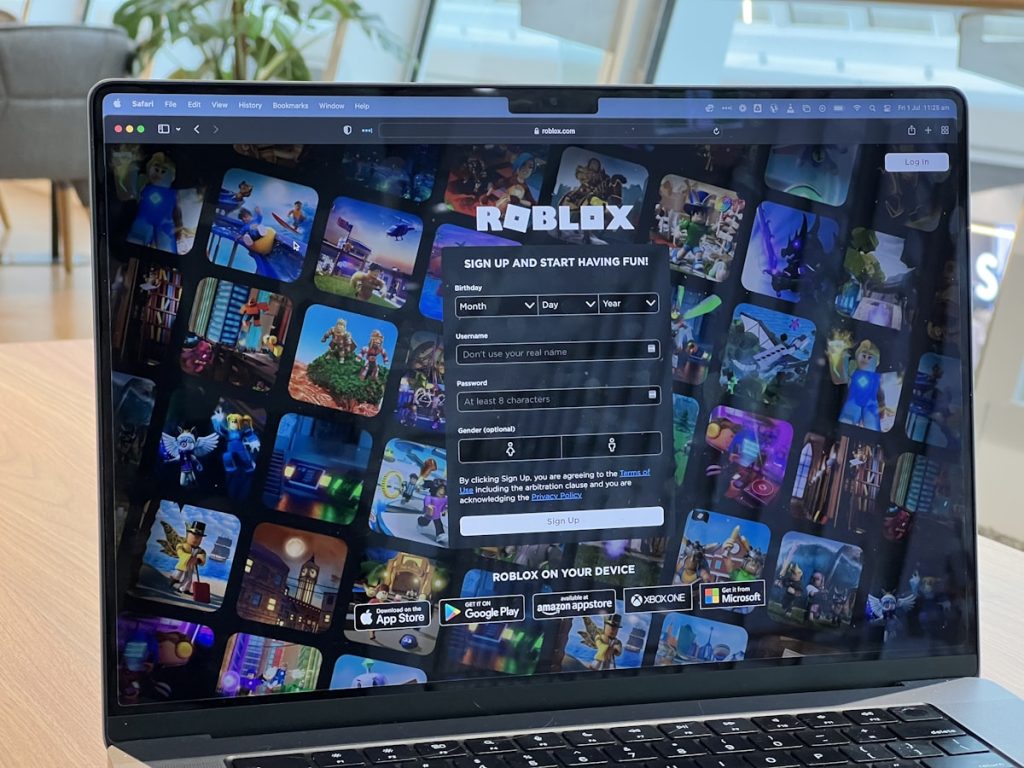

Roblox Launches Open-Source AI to Combat Predatory Behavior in Chats

Roblox, the global online platform hosting millions of user-generated games and social experiences, has unveiled a new open-source artificial intelligence (AI) system designed to protect minors from predatory behavior in chat interactions. The move comes amid growing concerns about child safety in digital spaces, particularly on platforms popular with younger audiences.

The Challenge: Safeguarding Young Users

With over 70 million daily active users, many under the age of 13, Roblox has faced scrutiny over potential risks in its chat features. Predators have historically exploited in-game communication tools to groom children despite existing moderation efforts. Keyword filtering and human moderation alone struggle to keep pace with evolving tactics such as coded language and manipulative conversational patterns.

How the AI System Works

The new AI tool, developed by Roblox’s safety engineering team, employs advanced natural language processing (NLP) to detect harmful intent in real-time chats. Key features include:

- Contextual Analysis: Scans conversations for predatory patterns, such as grooming behaviors or attempts to move interactions off-platform

- Dynamic Learning: Adapts to new slang, misspellings, and evasive language used to bypass filters

- Risk Scoring: Flags high-risk messages for human moderators while automatically restricting suspicious accounts
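The article does not describe the model's internals. The following is a minimal, hypothetical sketch of how the three features above could fit together: text normalization for evasive spellings, scoring across a window of recent messages rather than single messages, and thresholds that route a conversation to human review or account restriction. The regex signals, weights, thresholds, and the names `normalize`, `score_message`, and `Conversation` are all illustrative assumptions standing in for a trained NLP model. This is not Roblox's code.

```python
import re
from dataclasses import dataclass, field

# Hypothetical signal patterns standing in for a trained NLP model.
# Names, patterns, and weights are illustrative assumptions only.
SIGNALS = {
    "off_platform": (re.compile(r"\b(snap(chat)?|discord|whatsapp|text me|dm me)\b"), 0.5),
    "secrecy": (re.compile(r"\b(don'?t tell|our secret|keep this between)\b"), 0.4),
    "personal_info": (re.compile(r"\b(how old|where do you live|what school)\b"), 0.3),
}

# Map common character substitutions back to letters so "d1sc0rd" matches "discord".
LEET = str.maketrans({"0": "o", "1": "i", "3": "e", "4": "a", "5": "s", "7": "t", "@": "a", "$": "s"})

REVIEW_THRESHOLD = 0.6    # assumed: send the conversation to a human moderator
RESTRICT_THRESHOLD = 1.0  # assumed: also restrict the account automatically


def normalize(text: str) -> str:
    """Lowercase, undo character substitutions, and collapse runs of 3+ repeated chars."""
    text = text.lower().translate(LEET)
    return re.sub(r"(.)\1{2,}", r"\1", text)


def score_message(text: str) -> float:
    """Sum the weights of every signal that appears in the normalized message."""
    norm = normalize(text)
    return sum(weight for pattern, weight in SIGNALS.values() if pattern.search(norm))


@dataclass
class Conversation:
    window: int = 10  # number of recent message scores considered together
    scores: list = field(default_factory=list)

    def add(self, text: str) -> str:
        """Score a new message and return an action for the whole conversation."""
        self.scores.append(score_message(text))
        self.scores = self.scores[-self.window:]  # keep only the recent context
        total = sum(self.scores)
        if total >= RESTRICT_THRESHOLD:
            return "restrict"  # restricted accounts would also go to human review
        if total >= REVIEW_THRESHOLD:
            return "review"
        return "allow"


if __name__ == "__main__":
    convo = Conversation()
    for msg in ["hi! nice build", "how old are you?", "add me on d1sc0rd", "don't tell your parents"]:
        print(f"{msg!r} -> {convo.add(msg)}")
```

Running the demo prints `allow`, `allow`, `review`, `restrict` for the four messages: no single message crosses a threshold on its own, but the accumulated context does, which is the point of contextual rather than per-message filtering. A fixed regex list cannot "adapt" the way the article describes; in a real system that role would fall to retraining the model on newly observed evasion patterns.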

Open-Source Approach for Wider Impact

By releasing the system as open-source, Roblox aims to foster collaboration across the tech industry. Developers and platforms can audit, modify, and implement the code to bolster safety measures. “Child safety shouldn’t be a competitive advantage,” said Roblox CEO David Baszucki. “Open-sourcing this tool accelerates protection for kids everywhere.”

Privacy and Transparency Considerations

Roblox emphasizes that the AI operates with strict privacy safeguards, analyzing text locally on devices before transmitting flagged content. The system complies with the Children’s Online Privacy Protection Act (COPPA) and excludes voice or video data from processing. Parents and users can also manage safety through parental controls and reporting tools.

Reactions from Safety Advocates

Child protection groups have welcomed the initiative. “Proactive use of AI to identify predatory behavior is a game-changer,” said Sarah Gardner, CEO of the nonprofit Protect Young Eyes. “However, continuous updates and human oversight remain critical, as predators constantly adapt.”

Challenges Ahead

While the AI marks a significant step forward, challenges persist. False positives could disrupt innocent interactions, and open-source access might inadvertently help bad actors study the system. Roblox has committed to quarterly updates and a bug bounty program to address vulnerabilities.

A New Standard for Online Safety?

Roblox’s decision to open-source its safety infrastructure sets a precedent for collaborative approaches to digital protection. As regulators worldwide push for stricter online safety laws, the initiative highlights how AI—when designed transparently—can complement human efforts to create safer virtual environments for children.